Imagine having a super-powered contract review assistant that can comb through thousands of pages in record time to flag key clauses, risks, and insights. That’s the promise of Legal LLMs: generative AI large language models that work like highly advanced predictive text systems with specialized training in a legal context. For in-house legal teams, these tools accelerate the review of contracts, invoices, and legal service requests by sparing attorneys much of the manual work of poring over mountains of paperwork and emails. That’s why AI adoption is surging for the document-intensive tasks that frequently overwhelm in-house legal professionals.

Artificial Intelligence (AI) broadly refers to computer systems capable of tasks that normally require human intelligence, like visual perception, speech recognition, and decision-making. Machine learning is a specific subfield within AI where algorithms improve through experience without explicit programming. Instead, the system is trained using a representative dataset. The neural network is a common machine learning structure, inspired by the human brain’s interconnectivity.

A significant AI area utilizing machine learning is Natural Language Processing (NLP), which focuses on automating language understanding and generation. NLP employs neural networks trained on vast text data. Large Language Models (LLMs), the generative AI models designed to produce human-like text, are an advanced class of NLP models. So, while not all AI uses machine learning, modern innovations like large language models rely on machine learning and neural networks to achieve their natural language capabilities.

This brings us to recent advancements in generative AI and the advent of Large Language Models (LLMs), which have driven much of the recent excitement around AI applications in the legal field. These are specialized neural networks trained on vast amounts of text data, designed to understand and generate text.

What are Large Language Models?

Large language models (LLMs) like ChatGPT are trained on massive datasets of billions of data points and then refined through human feedback loops of prompts and responses. LLMs break text down into tokens, commonly occurring groups of roughly 4-5 characters, and convert each token into numbers the model can process. What the model learns during training is stored in its parameters. When you provide a prompt, the LLM uses that context to statistically predict the most likely sequence of tokens and generate a coherent response, like an advanced autocomplete.
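To make "statistically predict the most likely next token" concrete, here is a minimal sketch in Python. It is a toy, not how a production LLM works: it assumes whole words stand in for tokens and simple counts stand in for a neural network's parameters. The function names and the sample lease text are made up for illustration.

```python
from collections import Counter, defaultdict

def train_bigram_model(text):
    """Count which token follows which (toy stand-in for LLM training)."""
    tokens = text.lower().split()  # assumption: whitespace words act as tokens
    follows = defaultdict(Counter)
    for current, nxt in zip(tokens, tokens[1:]):
        follows[current][nxt] += 1
    return follows

def predict_next(follows, token):
    """Return the most frequently seen next token, or None if unseen."""
    candidates = follows.get(token.lower())
    if not candidates:
        return None
    return candidates.most_common(1)[0][0]

# Hypothetical training text for illustration only.
corpus = (
    "the tenant shall pay rent monthly . "
    "the tenant shall maintain the premises . "
    "the landlord shall repair the roof ."
)
model = train_bigram_model(corpus)

print(predict_next(model, "tenant"))     # most common word after "tenant"
print(predict_next(model, "indemnify"))  # never seen in training
```

The toy model can only echo patterns it has counted. It has no idea what a tenant is, which is the same gap between pattern-matching and comprehension discussed next, just at a much smaller scale.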

However, LLMs have limitations. They don’t learn or understand content the way people do: they generate plausible responses from their parameters without comprehending meaning. LLMs have restricted context windows that limit how much text they can process, require substantial computational resources, and struggle with math or numbers. Poor data quality or biased prompts can result in inaccurate outputs. LLMs are powerful but require thoughtful prompts and oversight to mitigate risks. Understanding that they rely on statistical patterns rather than true comprehension sets realistic expectations and allows appropriate use for augmenting legal work, with the guidance and validation that such use requires.

Challenges and Common Issues with (Legal) LLMs

While large language models represent a breakthrough innovation, they have inherent limitations requiring prudent risk management. As static systems, LLMs cannot continuously adapt on the fly post-training. Their memory capacity, or “context window,” varies widely from model to model. More limited windows constrain the processing of lengthy content. State-of-the-art models boast expansive context windows, but these still pale in comparison to human memory.
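One common way to work within a limited context window is to split a long document into pieces that each fit the budget. The sketch below shows the idea in Python. It assumes a rough estimate of about 4 characters per token and a hypothetical `max_tokens` budget; real tokenizers and real model limits differ, so treat the numbers as placeholders.

```python
def estimate_tokens(text, chars_per_token=4):
    """Rough token estimate; assumption: about 4 characters per token."""
    return -(-len(text) // chars_per_token)  # ceiling division

def chunk_paragraphs(paragraphs, max_tokens):
    """Greedily pack paragraphs into chunks that fit the token budget.

    A single paragraph larger than the budget still gets its own chunk,
    so a real pipeline would need to split it further.
    """
    chunks, current, used = [], [], 0
    for para in paragraphs:
        cost = estimate_tokens(para)
        if current and used + cost > max_tokens:
            chunks.append(current)  # close the full chunk
            current, used = [], 0
        current.append(para)
        used += cost
    if current:
        chunks.append(current)
    return chunks

# Hypothetical contract sections, 40 characters (about 10 tokens) each.
sections = ["a" * 40, "b" * 40, "c" * 40]
for i, chunk in enumerate(chunk_paragraphs(sections, max_tokens=20), start=1):
    print(f"chunk {i}: {len(chunk)} section(s)")
```

Splitting has a cost: a clause in one chunk may depend on a definition in another, so reviewers still need to check findings against the whole document.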

More concerningly, LLMs have several key issues that warrant caution:

- Hallucinations: LLMs may generate or “hallucinate” data not present in reality, as they are optimized to respond to prompts without the ability to discern truth from fiction. This tendency to state false information with great confidence is concerning and requires oversight.

- Biases: The training data may contain societal biases encoded into the LLM’s parameters. Additionally, reinforcement learning through human feedback loops during training can further ingrain biases. Once deployed, even prompt wording can introduce biases that lead to unfair LLM responses.

- Inconsistency: Because LLMs generate each token statistically, and because models have randomness built in to enable creative responses, they do not always take the same path when responding to identical prompts. So, you cannot rely on consistent output, even after adjusting creativity settings.

- Misalignment: LLMs have demonstrated some awareness of when their outputs are being evaluated or tested, and they can provide responses that diverge from a user’s true intent. This makes it challenging to fully understand how well a model aligns with user goals outside of testing scenarios.
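The inconsistency issue above comes down to sampling. The Python sketch below illustrates it with a "temperature" setting, a common name for the creativity control. The candidate tokens and their scores are hypothetical, and the sampling logic is a simplified stand-in for what a real model does.

```python
import math
import random

def softmax_with_temperature(scores, temperature):
    """Turn raw scores into probabilities; lower temperature sharpens them."""
    scaled = [s / temperature for s in scores]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def sample_token(tokens, scores, temperature, rng):
    """Pick one token; temperature 0 means always take the top score."""
    if temperature == 0:
        return tokens[scores.index(max(scores))]
    probs = softmax_with_temperature(scores, temperature)
    return rng.choices(tokens, weights=probs, k=1)[0]

tokens = ["terminate", "renew", "amend"]
scores = [2.0, 1.5, 0.5]  # hypothetical model scores for the next token
rng = random.Random()     # unseeded on purpose, so runs differ

greedy = {sample_token(tokens, scores, 0, rng) for _ in range(20)}
sampled = [sample_token(tokens, scores, 1.0, rng) for _ in range(20)]
print(greedy)   # a single token every time in this toy
print(sampled)  # a mix that changes between runs
```

In this toy, a temperature of 0 is fully repeatable. Deployed systems can still vary from run to run even at low settings, which is why the bullet above warns against relying on consistent output.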

An informed view of what LLMs can and cannot do lets legal teams realize their transformative potential through responsible oversight. The technology warrants measured, ethical adoption.

Realizing the Benefits of Legal LLMs & Generative AI While Mitigating the Risks

Generative AI has huge potential upsides for legal teams if thoughtfully applied. But we need to be realistic — Legal LLMs aren’t going to completely replace your skills and judgment overnight. Rather, they can take the grunt work off your plate so you can focus on high-value tasks like strategy, analysis, and client needs.

Before turning LLMs loose, comprehensive testing and review by real experts is crucial. We can’t just take what LLMs spit out as gospel truth. Their output needs real validation via ongoing review. LLMs should work alongside professionals, not substitute for the judgment you’ve sharpened through experience.

It’s also critical to regularly audit for biases, inconsistencies, or false info. The teams behind LLMs must take responsibility for thoughtfully addressing these risks head-on. Rigorous data governance, privacy protection, and cybersecurity are essentials, too. We need systems we can understand, not opaque “black boxes” that undermine trust.

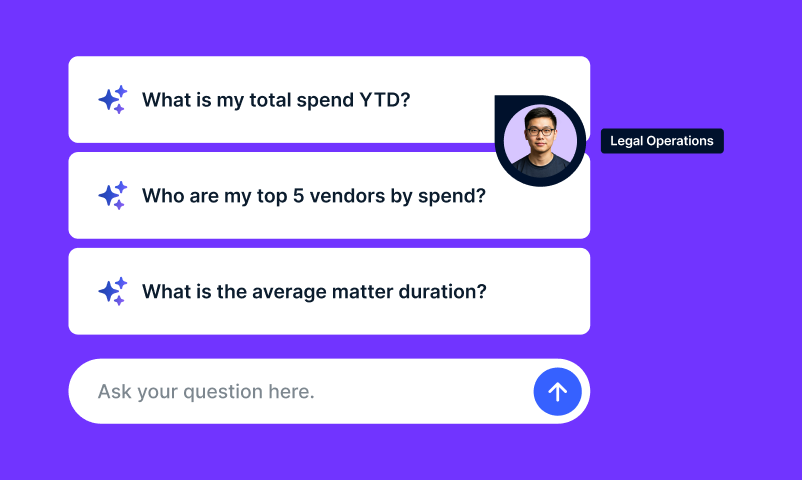

LLMs can uniquely supercharge vital legal work:

- They can rapidly pinpoint the most relevant info for document review out of massive document troves, saving tons of time over lawyers poring over everything manually. But human oversight still matters to double-check what the LLM flags and catch subtleties it might miss.

- For analyzing contracts, LLMs can efficiently unpack dense legalese to surface issues like inconsistencies or missing pieces for tightening before signing. But niche clauses unique to certain deals might get overlooked. Experts still need to verify that nothing big slipped through the cracks.

- LLMs shine at legal research, promptly finding past precedents, citations, and case law to build persuasive arguments. However, they might miss seminal cases only seasoned attorneys would know; your guidance remains key for strategy.

- LLMs can also assist organizations in the creation of legal service requests and invoice summaries, helping to ensure a more streamlined workflow, saving valuable time, and bringing clarity to collection processes. Human oversight, however, is still essential to ensure crucial elements are included and that requests and summaries get to the right people or departments.

Navigating the Ethical Frontier

Implementing new technologies for a legal team requires prudence to uphold core values like transparency, fairness, and accountability, considering the potential risks and rewards tied to distinct AI models.

While AI promises benefits like efficiency and insights, particularly in routine tasks like contract review, it is imperative to distinguish between consumer models and enterprise generative AI solutions. Consumer models, like ChatGPT from OpenAI and comparable offerings from Microsoft and Google, are accessible but pose significant data privacy concerns that are unacceptable for legal professionals. Such models may use confidential client data for future training or other purposes, potentially exposing sensitive information inadvertently.

In stark contrast, enterprise solutions offer the robust data protection that in-house teams need. These commercial models assure that client data won’t be used in future model training and that results won’t be shared or misused. This safeguard is pivotal for in-house legal professionals who handle confidential information daily and must assure clients and internal stakeholders about data security. Hence, in-house legal teams should avoid consumer-level AI models so they do not compromise client data privacy.

With these distinctions in mind, in-house legal teams must consider the following when evaluating AI solutions for integration into workflows like contract review and legal invoice examination:

- Explainability: In-house legal professionals should require AI providers to disclose the inner workings of their systems. Understanding how recommendations are generated is crucial to fostering trust in AI outputs and preventing reliance on opaque “black box” systems unsuitable for legal work.

- Accountability: Despite AI’s efficiency in reviewing contracts and invoices, in-house lawyers must still thoroughly vet AI outputs, establishing clear oversight procedures without mindlessly following AI-generated advice. Human oversight remains essential.

- Fairness: Ensuring AI is developed without biases is essential to uphold legal principles. Continuous monitoring and assessment during both the development and production phases are necessary to sustain fairness.

- Transparency: In-house teams need to be transparent about their AI usage with clients and courts, clearly communicating the chosen AI’s capabilities and limitations.

- Risk Assessment: Identify and mitigate potential harms, like biases, security flaws, or loss of professional judgment, early when assessing AI solutions for integration into workflows.

The sweet spot is thoughtfully harnessing AI’s power while mitigating risks through governance, security, testing, and expertise-based oversight. This balanced approach lets you ethically integrate AI into legal work to augment your talent.

Ready to learn more about how you can integrate AI into your Legal workflows? Download our full eBook entitled The Legal Professional’s Handbook: Generative AI Fundamentals, Prompting, and Applications.